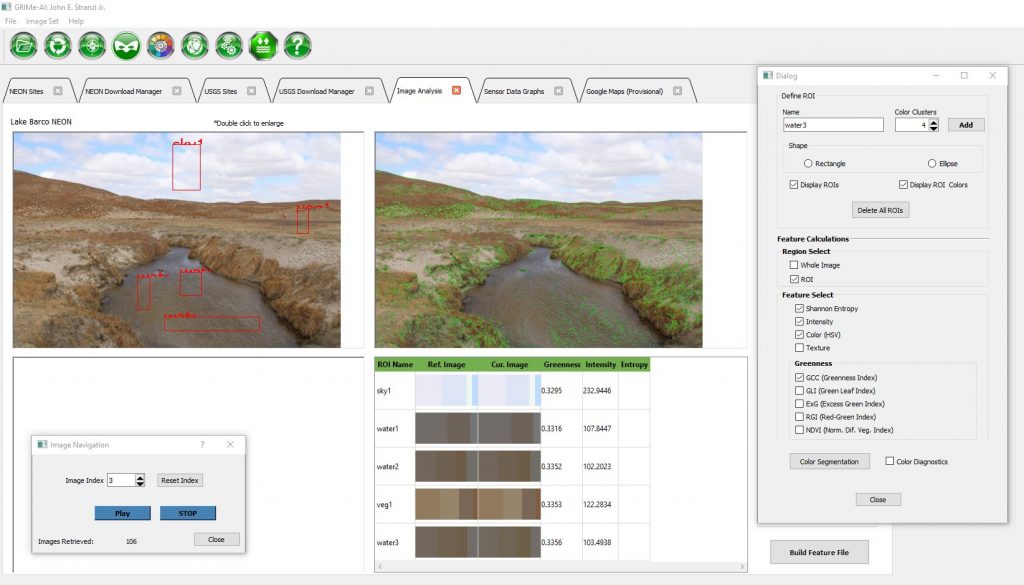

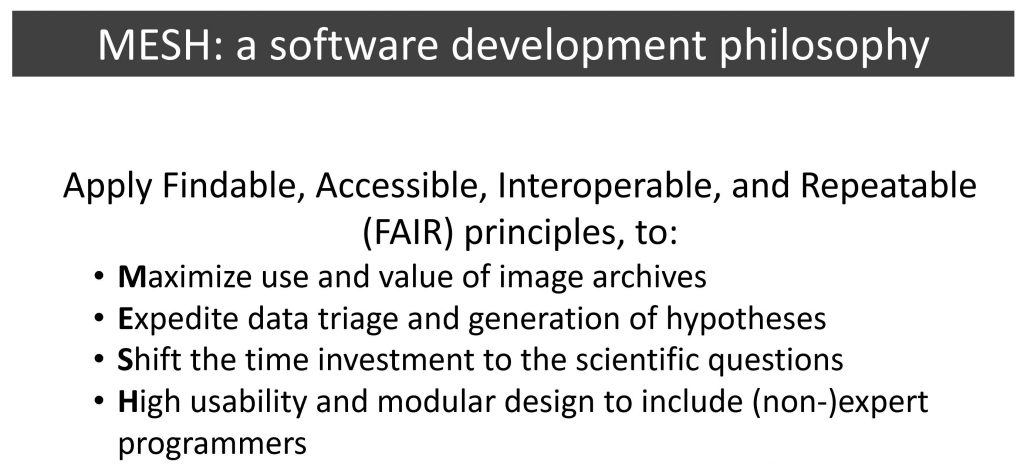

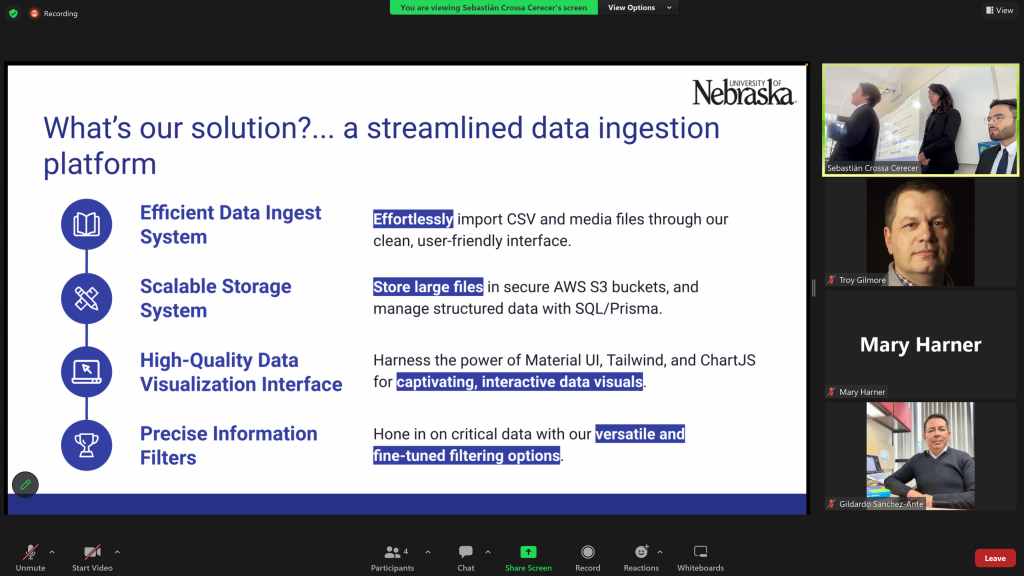

The project folder on my computer is labeled “2021_GRIME_AI.” The date is a reminder of the time and energy invested in GRIME AI. Our motivation has always been to enable others to extract information from imagery, and we’re thrilled to share this software that facilitates the entire data science workflow, from data acquisition to visualization/exploration to model building and deployment.

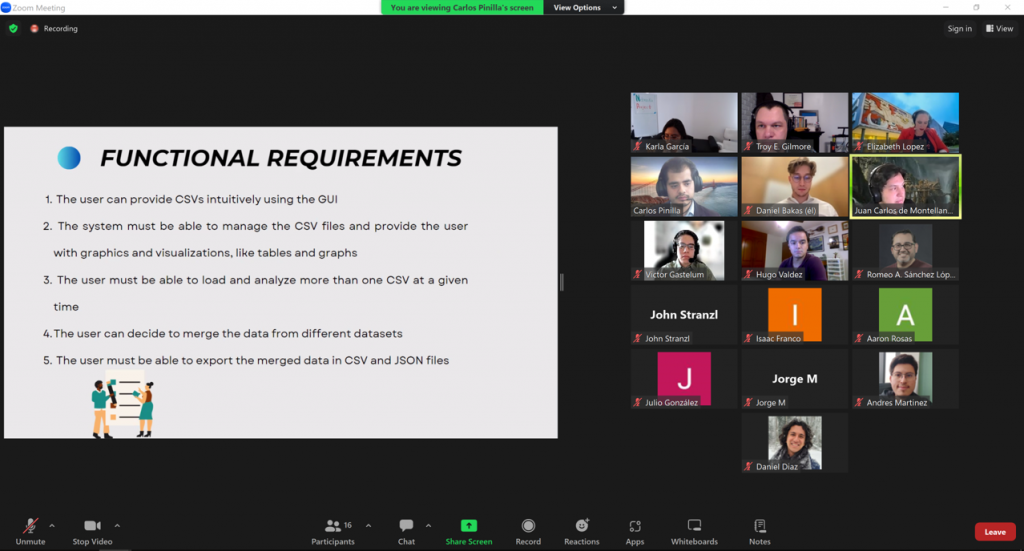

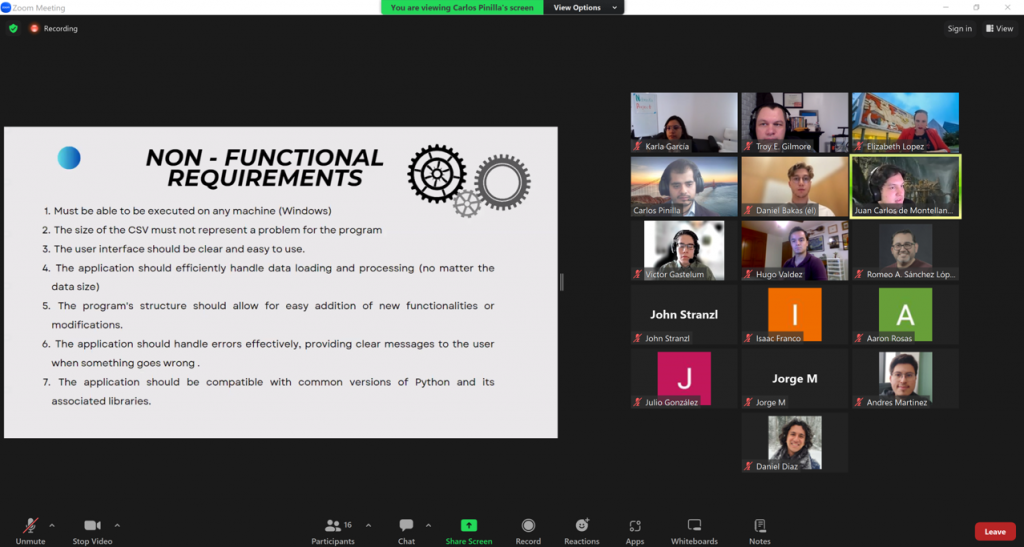

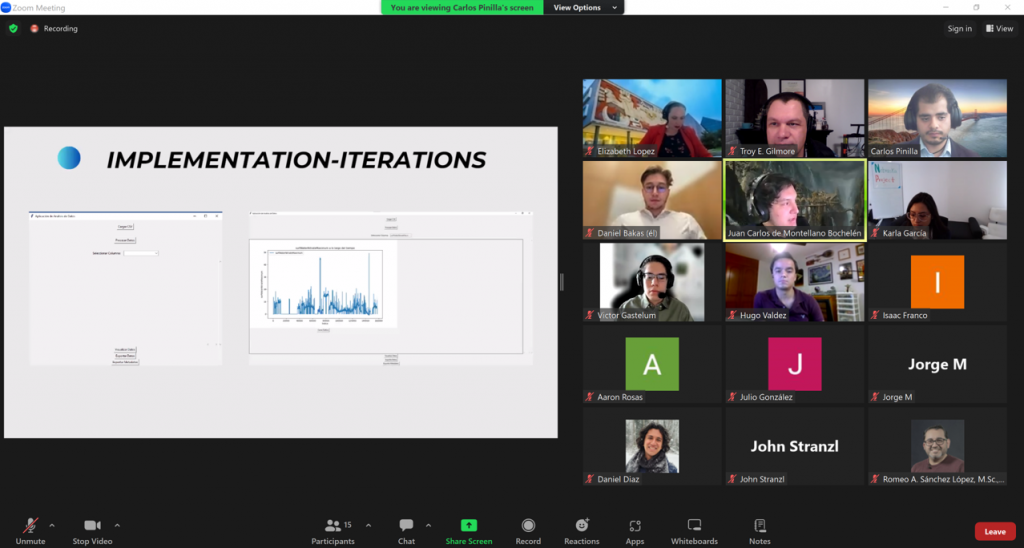

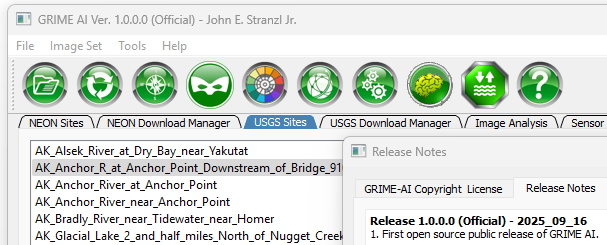

John Stranzl is the lead developer of GRIME AI. Including the prototype he brought to the GRIME Lab, he has written almost every line of code. He had the vision to create the complete data science workflow, from data acquisition to model deployment, in GRIME AI.

Special credit goes to Mary Harner at the University of Nebraska at Kearney. Mary’s connection with the Platte Basin Timelapse project and depth of experience with image-based projects for science and communication were foundational for GRIME AI. Her mentorship skills are unparalleled and a benefit to many students who have participated in GRIME Lab projects.

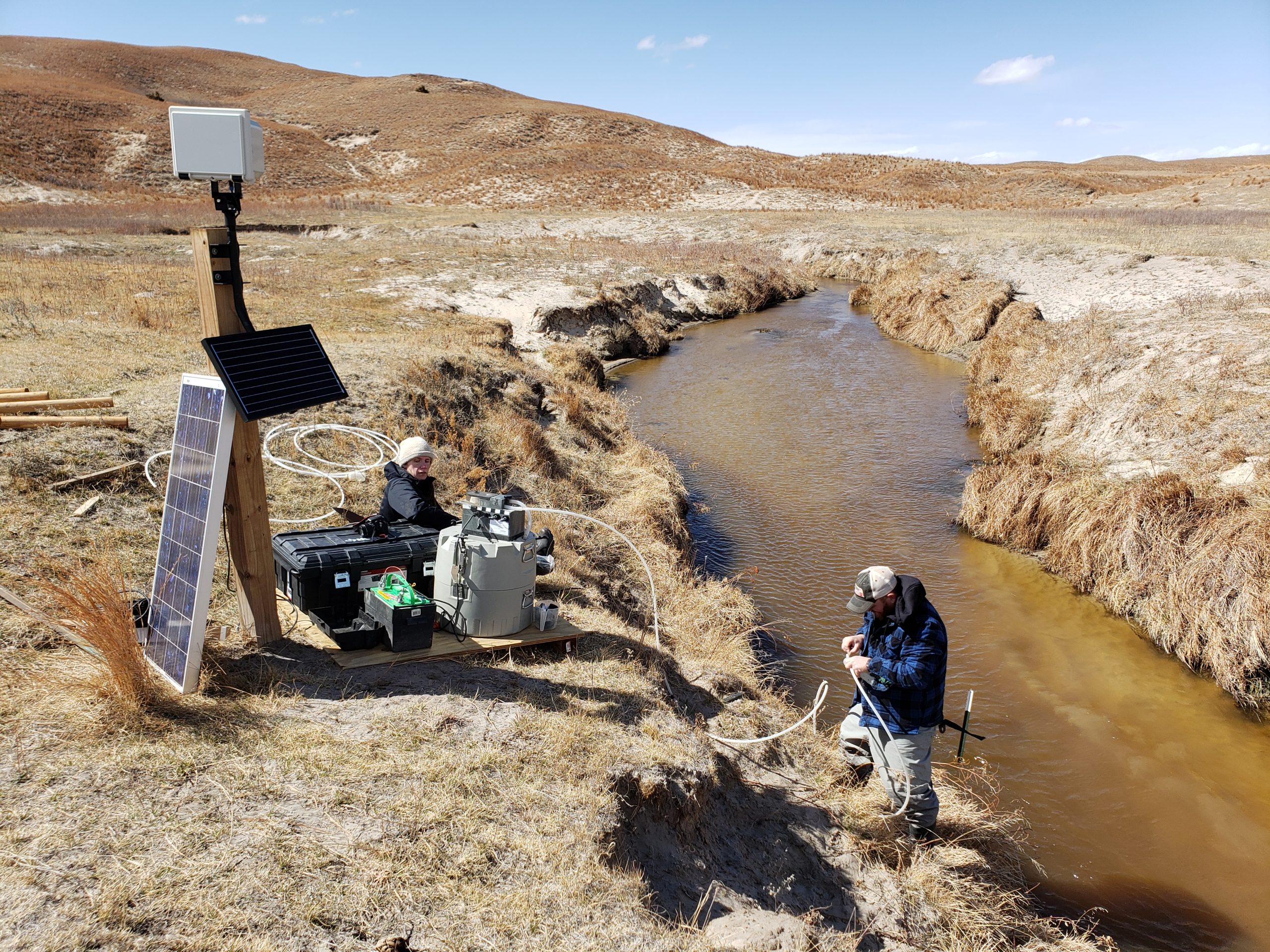

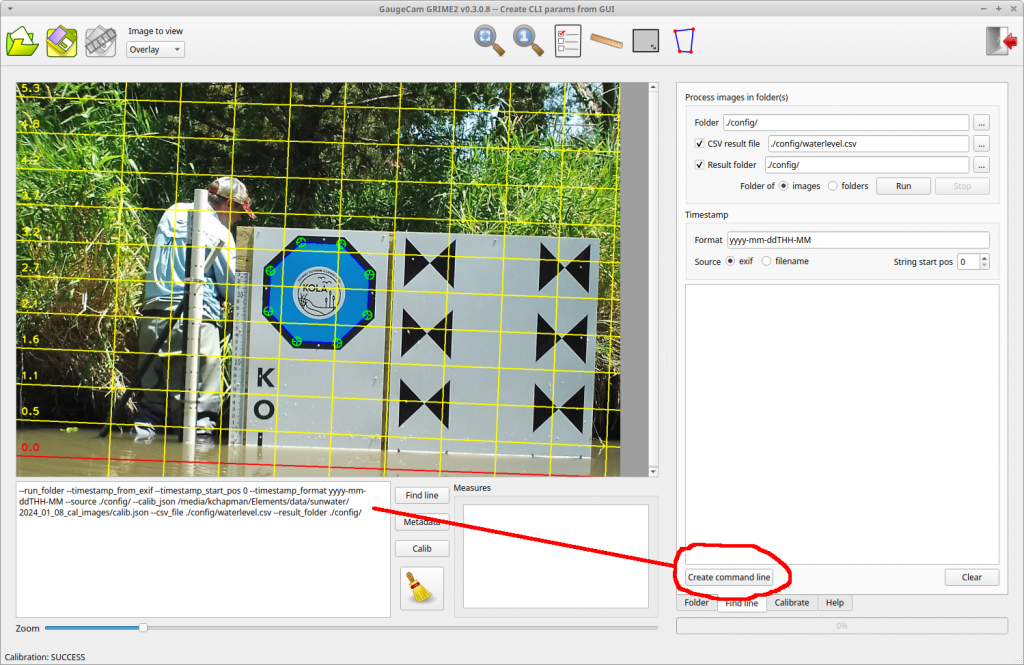

Ken Chapman, who developed the first GRIME software (GRIME2) and conducted the prototype study for the GRIME AI workflow, deserves all kinds of credit as well. Without Ken’s relentless energy, expertise, and networking skills, we would never have connected with John or built GRIME AI.

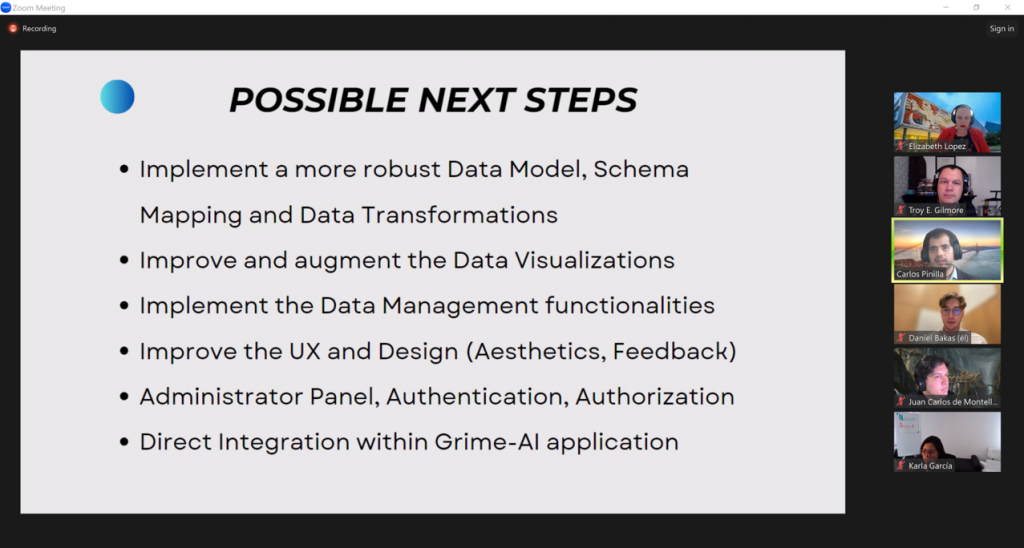

This is the first public release of GRIME AI. As early testers, we’ve encountered a few “undocumented features,” but our beta testing experience confirms that the benefits GRIME AI delivers far outweigh any reason to delay its debut. This marks the beginning of something much bigger, and we’re thrilled to finally share GRIME AI with the world.

Download the installer: Go.unl.edu/GRIMEAIUserForm

Visit the GRIME AI Wiki: Github.com/JohnStranzl/GRIME-AI/wiki

GitHub Repository: Github.com/JohnStranzl/GRIME-AI

We have been fortunate to have support, financial and moral, from like-minded individuals and agencies. Thank you to Frank Engel, Keegan Johnson, and Russ Lotspeich for the opportunity to work with USGS. Thank you to Marty Briggs for connecting us. Thank you also to the National Science Foundation for funding and to collaborator Andrew Richardson (NAU/PhenoCam) for joining us on this journey. We are truly “living the dream” when we can match exciting projects with great collaborators and humans.